We have been distracted by ridiculous arguments and fabricated “wars” for too long. We have been distracted by thinking that Google is Microsoft and Apple is Apple in a doomed fight already fought 20 years ago.

But that is not the fight we should be caring about at all. The fight we should be talking about, but aren’t, is the fight between mobile device makers and the carriers. This is the only real fight that matters.

Why should we care? Because carriers have been standing in the way of excellent user experiences for a long time. For years, Palm and HTC and Nokia and RIM have been kowtowing to the carriers. Carriers sell all the devices and the services, decide what software is available and what isn’t, decide what you can do with the device you paid $3000 or more for (over a two year contract). And who is punished? We, the consumers, with lousy service and controlled devices with crappy experiences.

I’ve been in this business for 13 years now. 3 years ago I had lost faith… until the iPhone. It wasn’t Apple’s designs or devices or user interfaces that excited me as much as it was their revolutionary business model (although the former excited me, too). Apple controls what apps are installed. Apple controls where the device is sold. Everyone — Apple, AT&T, developers — gets a cut of the revenues and the consumer gets an amazing experience and exceptional support.

I was just as excited when Google announced Android. The two most powerful companies in tech could surely go up against the four major carriers, reducing them to what they should be: regulated pipe providers just like your gas and electric company. And maybe, I thought, this will get Nokia and RIM to finally grow a pair and butt heads with the carriers. (Didn’t happen, as RIM’s own mobile app store is still not pre-installed on their devices.)

But this pipe dream is being crushed quickly. The carriers, after giving up ground initially, are fighting back. They are using Android’s openness against the company. The carriers refuse to carry the Nexus. Verizon cuts exclusive deals with Skype. New Android OS releases are slow to be “approved.” AT&T locks devices against side-loading and removes the Google Marketplace. There are secret (and ridiculous) deals on net neutrality. And now, adding insult to injury for Google, which expected to make most of its money from selling ads as it does on the web, carriers are removing Google Search in favor of Microsoft Bing as the only and default search option on certain Android-based smartphones.

My goal here is to re-focus the conversation, put the attention back where it belongs. This is war. And this war will go nothing like Apple v. Microsoft. This is about who controls the experience; who gets to interact with the customer.

The stakes are a lot higher.


This entry was posted on September 14, 2010 at 7:42 am and is filed under Favorites, Mobile/Smartphone, Technology (General). You can subscribe via RSS 2.0 feed to this post's comments. You can comment below, or link to this permanent URL from your own site.

I used the following custom PS1 to show a sequence ID for my next command on the prompt:

\[\033[01;34m\]\! \[\033[01;34m\]\w $ \[\033[00m\]

For the past few days, I noticed that the number always started at 1000. Then I realized there was an upper limit of 1000 commands stored in the history file, and I wanted to raise that limit.

This wiki contains a really great summary of the important items relevant to history management in bash. Take home messages include

- HISTFILE specifies the file to use for history storage.

- HISTFILESIZE limits how many entries are persisted in the history file. The file will not grow beyond that. When the variable is set, the file is truncated immediately if it is larger than this size.

- HISTSIZE limits how many records are kept in memory. On a busy day, if your session grows beyond that number of entries, the oldest ones are thrown away from memory. This can be larger or smaller than HISTFILESIZE.

- PROMPT_COMMAND is the command to run before showing prompt. It is a trick that you can use to show mbox or calendar event notification, for example.

- shopt -s can be used to set bash options after the session has started. One option, histappend, makes the history be appended to the file rather than overwriting it on every session termination. As someone else also reported, this does not have any effect on my machine.

- history -n can be used to load “new” entries into the memory. It might not work reliably if you switched HISTFILE. You can use history -r instead to discard the entries in the memory and restart from those in the persistent file.

A novel use of these tricks could be to keep a separate HISTFILE for each month. To avoid an empty start on the 1st of each month, you can copy the last 1000 lines from last month's file to start, for instance.
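The monthly-file idea could be sketched roughly as follows, here in Python as a small helper script. The directory layout, the `bash_history-YYYY-MM` file naming and all function names are assumptions made for this illustration, not something bash prescribes.

```python
from datetime import date, timedelta
from pathlib import Path
import tempfile


def monthly_histfile(history_dir, day):
    """Path of the history file for the month containing `day` (naming is hypothetical)."""
    return Path(history_dir) / f"bash_history-{day:%Y-%m}"


def previous_month(day):
    """Return a date that falls in the month before `day`."""
    return day.replace(day=1) - timedelta(days=1)


def seed_from_previous_month(history_dir, day, keep=1000):
    """Create this month's file from the last `keep` lines of last month's file.

    Does nothing if this month's file already exists or last month's is missing.
    """
    current = monthly_histfile(history_dir, day)
    previous = monthly_histfile(history_dir, previous_month(day))
    if current.exists() or not previous.exists():
        return current
    lines = previous.read_text().splitlines(keepends=True)
    current.write_text("".join(lines[-keep:]))
    return current


# Demo in a throwaway directory: last month has 1500 entries, the new file gets 1000.
with tempfile.TemporaryDirectory() as demo_dir:
    today = date(2010, 10, 1)
    monthly_histfile(demo_dir, previous_month(today)).write_text(
        "".join(f"command {i}\n" for i in range(1500))
    )
    seeded = seed_from_previous_month(demo_dir, today)
    seeded_name = seeded.name
    seeded_lines = seeded.read_text().splitlines()
```

A shell start-up file would then point HISTFILE at the path this returns. How that is wired up depends on your setup.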

Might, or might not, depending on one's purposes -- for example, if

one's purposes include a "sociological study" of the Python community,

then putting questions to that community is likely to prove more

revealing of information about it, than putting them elsewhere:-).

Personally, I gladly took the opportunity to follow Dave Thomas'

one-day Ruby tutorial at last OSCON. Below a thin veneer of syntax

differences, I find Ruby and Python amazingly similar -- if I was

computing the minimum spanning tree among just about any set of

languages, I'm pretty sure Python and Ruby would be the first two

leaves to coalesce into an intermediate node:-).

Sure, I do get weary, in Ruby, of typing the silly "end" at the end

of each block (rather than just unindenting) -- but then I do get

to avoid typing the equally-silly ':' which Python requires at the

_start_ of each block, so that's almost a wash:-). Other syntax

differences such as '@foo' versus 'self.foo', or the higher significance

of case in Ruby vs Python, are really just about as irrelevant to me.

Others no doubt base their choice of programming languages on just

such issues, and they generate the hottest debates -- but to me that's

just an example of one of Parkinson's Laws in action (the amount of

debate on an issue is inversely proportional to the issue's actual

importance).

One syntax difference that I do find important, and in Python's

favour -- but other people will no doubt think just the reverse --

is "how do you call a function which takes no parameters". In

Python (like in C), to call a function you always apply the

"call operator" -- trailing parentheses just after the object

you're calling (inside those trailing parentheses go the args

you're passing in the call -- if you're passing no args, then

the parentheses are empty). This leaves the mere mention of

any object, with no operator involved, as meaning just a

reference to the object -- in any context, without special

cases, exceptions, ad-hoc rules, and the like. In Ruby (like

in Pascal), to call a function WITH arguments you pass the

args (normally in parentheses, though that is not invariably

the case) -- BUT if the function takes no args then simply

mentioning the function implicitly calls it. This may meet

the expectations of many people (at least, no doubt, those

whose only previous experience of programming was with Pascal,

or other languages with similar "implicit calling", such as

Visual Basic) -- but to me, it means the mere mention of an

object may EITHER mean a reference to the object, OR a call

to the object, depending on the object's type -- and in those

cases where I can't get a reference to the object by merely

mentioning it I will need to use explicit "give me a reference

to this, DON'T call it!" operators that aren't needed otherwise.

I feel this impacts the "first-classness" of functions (or

methods, or other callable objects) and the possibility of

interchanging objects smoothly. Therefore, to me, this specific

syntax difference is a serious black mark against Ruby -- but

I do understand why others would think otherwise, even though

I could hardly disagree more vehemently with them:-).

Below the syntax, we get into some important differences in

elementary semantics -- for example, strings in Ruby are

mutable objects (like in C++), while in Python they are not

mutable (like in Java, or I believe C#). Again, people who

judge primarily by what they're already familiar with may

think this is a plus for Ruby (unless they're familiar with

Java or C#, of course:-). Me, I think immutable strings are

an excellent idea (and I'm not surprised that Java, independently

I think, reinvented that idea which was already in Python), though

I wouldn't mind having a "mutable string buffer" type as well

(and ideally one with better ease-of-use than Java's own

"string buffers"); and I don't give this judgment because of

familiarity -- before studying Java, apart from functional

programming languages where _all_ data are immutable, all the

languages I knew had mutable strings -- yet when I first saw

the immutable-string idea in Java (which I learned well before

I learned Python), it immediately struck me as excellent, a

very good fit for the reference-semantics of a higher level

programming language (as opposed to the value-semantics that

fit best with languages closer to the machine and farther from

applications, such as C) with strings as a first-class, built-in

(and pretty crucial) data type.

Ruby does have some advantages in elementary semantics -- for

example, the removal of Python's "lists vs tuples" exceedingly

subtle distinction. But mostly the score (as I keep it, with

simplicity a big plus and subtle, clever distinctions a notable

minus) is against Ruby (e.g., having both closed and half-open

intervals, with the notations a..b and a...b [anybody wants

to claim that it's _obvious_ which is which?-)], is silly --

IMHO, of course!). Again, people who consider having a lot of

similar but subtly different things at the core of a language

a PLUS, rather than a MINUS, will of course count these "the

other way around" from how I count them:-).

Don't be misled by these comparisons into thinking the two

languages are _very_ different, mind you. They aren't. But

if I'm asked to compare "capelli d'angelo" to "spaghettini",

after pointing out that these two kinds of pasta are just

about undistinguishable to anybody and interchangeable in any

dish you might want to prepare, I would then inevitably have

to move into microscopic examination of how the lengths and

diameters imperceptibly differ, how the ends of the strands

are tapered in one case and not in the other, and so on -- to

try and explain why I, personally, would rather have capelli

d'angelo as the pasta in any kind of broth, but would prefer

spaghettini as the pastasciutta to go with suitable sauces for

such long thin pasta forms (olive oil, minced garlic, minced

red peppers, and finely ground anchovies, for example - but if

you sliced the garlic and peppers instead of mincing them, then

you should choose the sounder body of spaghetti rather than the

thinner evanescence of spaghettini, and would be well advised

to forego the anchovies and add instead some fresh spring basil

[or even -- I'm a heretic...! -- light mint...] leaves -- at

the very last moment before serving the dish). Ooops, sorry,

it shows that I'm traveling abroad and haven't had pasta for

a while, I guess. But the analogy is still pretty good!-)

So, back to Python and Ruby, we come to the two biggies (in

terms of language proper -- leaving the libraries, and other

important ancillaries such as tools and environments, how to

embed/extend each language, etc, etc, out of it for now -- they

wouldn't apply to all IMPLEMENTATIONS of each language anyway,

e.g., Jython vs Classic Python being two implementations of

the Python language!):

1. Ruby's iterators and codeblocks vs Python's iterators

and generators;

2. Ruby's TOTAL, unbridled "dynamicity", including the ability

to "reopen" any existing class, including all built-in ones,

and change its behavior at run-time -- vs Python's vast but

_bounded_ dynamicity, which never changes the behavior of

existing built-in classes and their instances.

Personally, I consider [1] a wash (the differences are so

deep that I could easily see people hating either approach

and revering the other, but on MY personal scales the pluses

and minuses just about even up); and [2] a crucial issue --

one that makes Ruby much more suitable for "tinkering", BUT

Python equally more suitable for use in large production

applications. It's funny, in a way, because both languages

are so MUCH more dynamic than most others, that in the end

the key difference between them from my POV should hinge on

that -- that Ruby "goes to eleven" in this regard (the

reference here is to "Spinal Tap", of course). In Ruby,

there are no limits to my creativity -- if I decide that

all string comparisons must become case-insensitive, _I CAN

DO THAT_! I.e., I can dynamically alter the built-in string

class so that

    a = "Hello World"
    b = "hello world"
    if a == b
        print "equal!\n"
    else
        print "different!\n"
    end

WILL print "equal". In python, there is NO way I can do

that. For the purposes of metaprogramming, implementing

experimental frameworks, and the like, this amazing dynamic

ability of Ruby is _extremely_ appealing. BUT -- if we're

talking about large applications, developed by many people

and maintained by even more, including all kinds of libraries

from diverse sources, and needing to go into production in

client sites... well, I don't WANT a language that is QUITE

so dynamic, thank you very much. I loathe the very idea of

some library unwittingly breaking other unrelated ones that

rely on those strings being different -- that's the kind of

deep and deeply hidden "channel", between pieces of code that

LOOK separate and SHOULD BE separate, that spells d-e-a-t-h

in large-scale programming. By letting any module affect the

behavior of any other "covertly", the ability to mutate the

semantics of built-in types is just a BAD idea for production

application programming, just as it's cool for tinkering.

If I had to use Ruby for such a large application, I would

try to rely on coding-style restrictions, lots of tests (to

be rerun whenever ANYTHING changes -- even what should be

totally unrelated...), and the like, to prohibit use of this

language feature. But NOT having the feature in the first

place is even better, in my opinion -- just as Python itself

would be an even better language for application programming

if a certain number of built-ins could be "nailed down", so

I KNEW that, e.g., len("ciao") is 4 (rather than having to

worry subliminally about whether somebody's changed the

binding of name 'len' in the __builtins__ module...). I do

hope that eventually Python does "nail down" its built-ins.

But the problem's minor, since rebinding built-ins is quite

a deprecated as well as a rare practice in Python. In Ruby,

it strikes me as major -- just like the _too powerful_ macro

facilities of other languages (such as, say, Dylan) present

similar risks in my own opinion (I do hope that Python never

gets such a powerful macro system, no matter the allure of

"letting people define their own domain-specific little

languages embedded in the language itself" -- it would, IMHO,

impair Python's wonderful usefulness for application

programming, by presenting an "attractive nuisance" to the

would-be tinkerer who lurks in every programmer's heart...).

Alex
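To illustrate the "bounded dynamicity" point from the post above, here is a minimal Python sketch. It assumes a current CPython 3, where the module the post calls `__builtins__` is imported as `builtins`; the helper names are invented for the example. It shows that the built-in string class refuses to have its comparison replaced, while the name `len` can still be rebound, which is the worry the post describes.

```python
import builtins


def try_patch_str_eq():
    """Try to make every str comparison case-insensitive; report whether it worked."""
    try:
        # Python rejects assignments to attributes of built-in types.
        str.__eq__ = lambda self, other: self.lower() == other.lower()
    except TypeError:
        return False
    return True


def len_with_rebound_builtin():
    """Temporarily rebind the built-in name `len`, then restore it."""
    original_len = builtins.len
    builtins.len = lambda obj: 42  # the kind of rebinding the post worries about
    try:
        return len("ciao")
    finally:
        builtins.len = original_len


patched = try_patch_str_eq()
strings_equal = "Hello World" == "hello world"  # comparison is unchanged
rebound_result = len_with_rebound_builtin()
restored_result = len("ciao")
```

The first half corresponds to the Ruby example in the post: the attempt fails, so the two strings still compare as different. The second half shows why the post would like built-ins "nailed down".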

pip installs packages. Python packages.

pip is a replacement for easy_install. It uses mostly the same techniques for finding packages, so packages that were made easy_installable should be pip-installable as well.

If you use virtualenv – a tool for installing libraries in a local and isolated manner – you’ll automatically get a copy of pip. Free bonus!

Over the past year and a bit I've been trying to really 'grok' the functional way of programming computers. I've been doing it the 'other' (imperative) way for over 30 years and I figured it's time for a change, not because I expect to become suddenly super productive but simply to keep life interesting. I also hope that it will change my perspective on programming. Think of this piece as an intermediary report, a postcard from a nice village in functional programming land.

So far, it's been a rocky ride. I'm fortunate enough to have found a few people on HN who don't mind my endless questions on the subject; I must come across as a pretty block-headed person by now. But that won't stop me from continuing and from trying to learn more about this subject. RiderOfGiraffes and Mahmud deserve special mention.

My biggest obstacle is that I came to the world of programming through that primitive programming device called the soldering iron. Before learning how to program I used '74 series logic chips', which are all about state and changing state. Transitioning from logic chips to imperative programming is a reasonably small step. It never really occurred to me there was another way of doing things until about two years ago. This is a major shortcoming of mine, I think: once I find something that works, I'll just go crazy using it and not bother to look outside of my tool chest to see if there are better (or different) ways of doing things.

The first thing that I ran into was 'anonymous functions'. This was a bit of a misnomer I think, because after all, after ld and strip are done with your program all functions are anonymous, whether your language supported anonymous functions or not. So that threw me for a loop for a bit until I realized that we're talking about functions that simply have their body appear in the place where normally you'd call a function, and since they're only there once you don't need to 'name' them, hence the 'anonymous'.

So, for a 'die-hard' C programmer that has never seen an anonymous function in their whole life, that's the thing you wished you had when you call 'quicksort' with a function that is used only once to determine the ordering of two array elements. Every time you call quicksort you end up defining a 'compare' function for two elements, and that function is typically only used in that one spot. If C had had anonymous functions you could have coded it right in there but because it doesn't you need to create a named function outside of the quicksort call and then pass a pointer to that function.
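A rough illustration of that difference, sketched in Python rather than C (the data and names are made up for the example): the first call passes a named comparison function that exists only to be handed to the sort, and the second writes the same comparison inline as an anonymous function.

```python
from functools import cmp_to_key

# (name, count) pairs to be sorted by count
records = [("pear", 3), ("apple", 7), ("fig", 1)]


# The C-style approach: a named comparator defined away from its single use.
def compare_by_count(a, b):
    """Negative if a sorts before b, zero if equal, positive otherwise."""
    return a[1] - b[1]


sorted_named = sorted(records, key=cmp_to_key(compare_by_count))

# The anonymous version: the comparison lives right where it is needed.
sorted_anonymous = sorted(records, key=cmp_to_key(lambda a, b: a[1] - b[1]))
```

Both calls give the same ordering. The only difference is whether the comparison needs a name of its own.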

What's not entirely obvious from the simplicity of having functions without names is how powerful a concept anonymous functions really are. I think this is one of the hardest concepts of functional programming to truly understand. Once you have anonymous functions and a few other essentials in your arsenal you can build just about any program, including recursive ones, even when at the language level your language does not explicitly support recursion. This is something that I never really properly understood until recently, after banging my head against the wall for a long long time. Math is not my strong suit, so to me 'functions' are bits of code that do something and then return a value. The whole concept of a function returning another function seemed alien and strange to me at first (and to some extent still does), but I'm getting more comfortable with it.
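Two small Python sketches may make this less abstract. The first is a function that returns another function. The second uses a fixed-point combinator, which is one well-known way to get recursion out of anonymous functions alone. The text above does not name a technique, so treating it as the one meant is an assumption, and the names `make_adder` and `fix` are invented for the example.

```python
def make_adder(n):
    """Return a new function that adds n to its argument."""
    return lambda x: x + n


add_five = make_adder(5)

# A fixed-point combinator (the strict "Z" variant). Nothing inside it refers
# to itself by name; the self-application x(x) is what produces the recursion.
fix = lambda f: (lambda x: f(lambda v: x(x)(v)))(lambda x: f(lambda v: x(x)(v)))

# Factorial written without the function ever mentioning its own name:
# `self` is supplied by `fix` and stands for "the recursive call".
factorial = fix(lambda self: lambda n: 1 if n == 0 else n * self(n - 1))

adder_result = add_five(3)
factorial_result = factorial(5)
```

Here `adder_result` is 8 and `factorial_result` is 120, even though the factorial lambda never calls anything named `factorial`.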

Next up is assignments. In imperative programming languages the first thing you learn about is assignment, in functional programming it is the last. It's quite amazing how far you can go in functional code without ever seeing 'the left hand side' of the expression. Typically in imperative code you'll see this pattern:

functiondefinition nameoffunction (bunch of parameters)
    somevariable = someexpression
    anothervariable = anotherexpression using somevariable

    [optionally someglobal = yetanotherexpression, print something or some other effect]

    return finalexpression

It's a fairly simple affair: you start at the top, evaluate the expressions one by one, assign the results of the sub-expressions to temporary variables and then at the end you return your result. Side effects are propagated to other parts of the program by changing global state, doing output or other 'useful' operations. Every time you see that '=' sign you are changing state, either locally for local variables or with a greater scope. In functional programs it is also possible to 'change state' but normally you will only do that very explicitly and very sparingly, because you'll find that you don't need to.

The 'someglobal' assignment from the example above is called a side-effect, the function does more than just compute a value, it also modifies the state of the program in a way that is unpredictable from the calling functions point of view. It's basically as if the world outside the chain of functions has been influenced by the function call, instead of the function simply returning a value.

This is one of the easiest pitfalls of 'imperative' coding to fall into, and it is closely related to spaghetti programming: modifying state left, right and center is a bad thing, just like 'goto' when used indiscriminately. Now, nothing stops you from programming in a 'functional style' in a language like C or some other 'imperative' language that supports function calls (and most languages do). This will probably have the side-effect (grin) of you spending less time debugging your code; plenty of times the subtle and invisible changes of state by code at a low level are the source of some pretty mean bugs.
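To make the distinction concrete, here is a small sketch in Python with made-up names. Both functions compute the same total, but the first also changes state outside itself, which the caller cannot see from the call alone.

```python
call_log = []  # module-level state, playing the role of 'someglobal'


def total_with_side_effect(prices):
    """Return the total, and also quietly modify state outside the function."""
    total = sum(prices)
    call_log.append(total)  # the side effect
    return total


def total_pure(prices):
    """Return the total and touch nothing else."""
    return sum(prices)


pure_result = total_pure([1, 2, 3])
log_after_pure = list(call_log)          # still empty

impure_result = total_with_side_effect([1, 2, 3])
log_after_impure = list(call_log)        # now holds the total
```

Calling the pure version any number of times leaves the rest of the program as it was. Every call to the other version leaves a trace behind.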

Because functional programming is all about functions calling other functions which compute values and so on, you'll find that there is much less need to store intermediate results explicitly. You still need variables to pass parameters in to functions, but on the whole I find that if I re-write the same bit of code in a functional style, most of my 'local' variables simply disappear. I've been pushing this very far (probably too far) in a little test website that I built using that good old standby PHP. It is the last language on the planet to be described as 'functional', and yet, if you force yourself to concentrate on writing side-effect free code you can have a lot of fun by simply trying to avoid the '=' key on your keyboard.
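As a small illustration of local variables disappearing, here is the same computation written twice in Python (the task and names are invented for the example, and Python stands in for the PHP mentioned above).

```python
def sum_even_squares_imperative(numbers):
    """Imperative style: temporaries and repeated assignment."""
    total = 0
    for n in numbers:
        if n % 2 == 0:
            square = n * n
            total = total + square
    return total


def sum_even_squares_functional(numbers):
    """Functional style: one expression, no assignments in the body."""
    return sum(n * n for n in numbers if n % 2 == 0)


imperative_result = sum_even_squares_imperative([1, 2, 3, 4])
functional_result = sum_even_squares_functional([1, 2, 3, 4])
```

Both return 20 for this input. The second version has no '=' in its body at all.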

Of course, in the end the only way a computer can do anything useful is to change state somewhere so eventually you'll have to 'give in' and make an assignment to store the result of all the work the functions have done but it's definitely an eye opener to see how far you can get with this side-effect free code.

Evaluating functional code is very hard when all you've seen your whole programming career long is imperative code. Another stumbling block on the path to enlightenment, for a person who already knows how to program 'imperatively', is unlearning years of conditioning on how to simply read a program. You have to train yourself to start the understanding of code you're looking at from the innermost expressions, which are the first to be evaluated. This is hard because you've conditioned yourself to read code like you would read a book, from the top to the bottom, and from the left to the right.
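A tiny Python example of reading from the inside out (the word list is made up): the nested expression is evaluated starting at the innermost call, which is the reverse of the order in which it appears on the line.

```python
words = ["delta", "Alpha", "charlie", "Bravo"]

# Functional style: read from the innermost call outwards.
#   1. map(str.lower, words)  lower-cases every word
#   2. sorted(...)            sorts the lower-cased words
#   3. ", ".join(...)         glues them into one string
nested = ", ".join(sorted(map(str.lower, words)))

# The same computation written top to bottom with temporaries,
# in the order an imperative reader expects.
lowered = [word.lower() for word in words]
ordered = sorted(lowered)
stepwise = ", ".join(ordered)
```

Both `nested` and `stepwise` end up as "alpha, bravo, charlie, delta". Only the reading order differs.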

We like to think of computers as 'automated humans reading a text and executing instructions line-by-line'. All this conditioning can be a significant obstacle; you first have to 'unlearn' that habit, which can be quite hard. And 'all the bloody parentheses' don't make that easier; it's sometimes hard to see the wood for the trees. I feel that I'm slowly getting better at this, but it certainly doesn't feel natural just yet.

So much for this intermediary report on the travels in the land of functional programming. The natives are nice, the museums contain interesting exhibitions, and I think I'll hang around here for a bit longer to see what else I can find.

Maybe I'll even settle here, if I find that I can do what I am already able to do in a way that is more fun, more reliable or more productive, or any combination of those. But that's still a long way off.

I was about to start learning Maven, when I discovered buildr. XML makes me dizzy. buildr's syntax is so much more 2010.

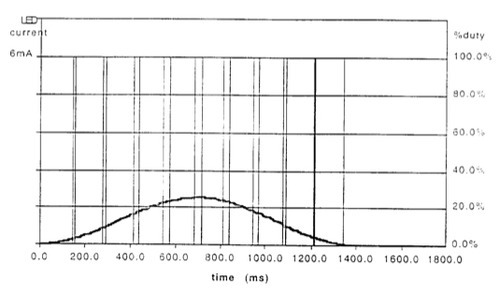

In July 2002, Apple filed a patent for a “Breathing Status LED Indicator” (No. US 6,658,577 B2). They described it as a “blinking effect of the sleep-mode indicator in accordance with the present invention mimics the rhythm of breathing which is psychologically appealing.”

The average respiratory rate for adults is 12-20 breaths per minute, which is the rate that the sleep-indicator light fades in and out on most Apple laptops. Older models such as the Macintosh PowerBook, however, use a blinking LED indicator, with discrete pulses in one-second intervals.

The other day, I noticed that my friend’s Dell laptop had a similar feature but with a shorter fade-in-fade-out period. Its rate was around 40 blinks per minute, or the average respiratory rate for adults during strenuous exercise—not very indicative of something in sleep-mode.

It’s interesting how a lot of companies try to copy Apple but never seem to get it right. This is yet another example of Apple’s obsessive attention to detail.

Now if you don’t mind, I’m going to stare at the gentle, soothing light on my MacBook Pro.

While investigating GWT, I came across this comment.

I do not recommend GWT, because I had 1 year experience with that.

List of GWT disadvantages:

- simplest thing to be done requires 4-5 java classes with complex relationships

- UI work fully concentrated in hands of very experienced java programmer, not simple

- UI java programmer should also be aware of server side affairs very well, so hire 5 years experience programmer and GWT might become more friendly

- not friendly for SEO

- heavy dependence on Javascript, really it’s pure javascript, with all disadvantages of this insecure and problem-making technology

- seems to create memory leaks in browsers

- compilation time is very long

- requires your custom effort to integrate ORM or data layer

- there is no clear separation between work of web-master, server side java programmer and art-designer. It seems art and presentation layer workers should access java code with good confidence.

List of good things:

- testing is quite simple

I recommend Jboss Seam as a much more mature and thoughtful solution, including several UI options.

I was reading a post regarding polymorphism when I realized I totally forgot how to create a compiler. Time to review yacc and Lex, and pick up new things like PLY (a neat Python module that combines a lexer and a parser generator).

Apple's Safari Extensions Gallery went live today, and unveiled a few dozen extensions. Unsurprisingly, a few big players were invited to the party, and eBay, Twitter, Amazon and Microsoft even took four out of the six slots as the featured extensions. The other unsurprising fact is that Google is missing the fun. I know they have their own browser, and so does Microsoft. Either old friends have turned their backs on each other, or someone is becoming more and more arrogant.